Kubernetes Architecture and Core Components (High-Performance Network Components)

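Calico is another popular networking choice in the Kubernetes ecosystem. While Flannel is widely regarded as the simplest option, Calico is known for its performance and flexibility. Calico is also more full-featured: besides providing network connectivity between hosts and pods, it covers network security and management. The Calico CNI plugin wraps Calico's functionality within the CNI framework.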

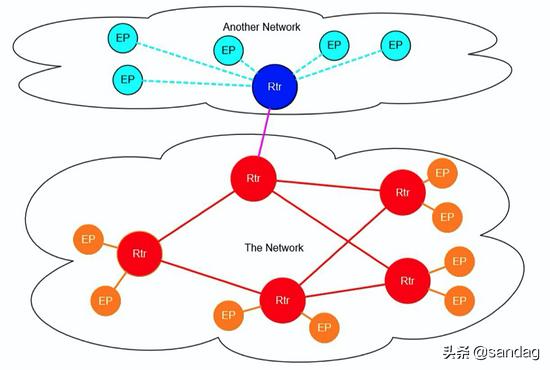

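Calico is a pure layer-3 networking solution based on BGP that integrates well with cloud platforms such as OpenStack, Kubernetes, AWS, and GCE. On every compute node, Calico uses the Linux kernel to implement an efficient virtual router (vRouter) that handles data forwarding. Each vRouter uses BGP to advertise the routes of the containers running on its node to the whole Calico network, and automatically installs the forwarding rules for reaching other nodes. Calico ensures that all traffic between containers is interconnected through IP routing. Calico nodes can be networked directly on top of the data center's existing fabric (L2 or L3) without extra NAT, tunnels, or an overlay network. Because there is no extra encapsulation and decapsulation, this saves CPU cycles and improves network efficiency.

In small clusters, Calico nodes can peer with each other directly; in large clusters, peering can go through additional BGP route reflectors.

In addition, Calico provides rich network policy based on iptables. It implements the Kubernetes Network Policy API, which restricts network reachability between containers.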

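2. Calico Architecture and BGP Implementation

BGP is a core, decentralized routing protocol of the Internet. It achieves reachability between autonomous systems (AS) by maintaining tables of IP routes, or "prefixes", and it is a path-vector routing protocol. However, not every network supports BGP, and Calico's control plane design requires the physical network to be a layer-2 network so that all vRouters can reach one another directly, because routes cannot use a physical device as the next hop. To support layer-3 networks, Calico therefore also offers an IP-in-IP overlay model, which transports data in overlay fashion. The IPIP header is very small and IPIP is built into the kernel, so in theory it is slightly faster than VXLAN, though less secure. The default configuration of Calico 3.x uses IPIP encapsulation rather than plain, unencapsulated BGP routing.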
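To make the header-size comparison concrete, here is a minimal Python sketch that computes the per-packet overhead of each transport and the largest pod MTU that still fits in one underlay packet. The header sizes are the standard ones for an IPv4 underlay (IP-in-IP adds one 20-byte outer IPv4 header; VXLAN adds an outer IPv4 header, a UDP header, a VXLAN header, and the inner Ethernet header). The function name and the 1500-byte underlay MTU are assumptions made for illustration.

```python
# Per-packet encapsulation overhead in bytes, assuming an IPv4 underlay.
OVERHEAD = {
    "none": 0,                  # plain routed traffic, no encapsulation
    "ipip": 20,                 # one extra outer IPv4 header
    "vxlan": 20 + 8 + 8 + 14,   # outer IPv4 + UDP + VXLAN + inner Ethernet
}


def max_pod_mtu(underlay_mtu: int, mode: str) -> int:
    """Largest pod-side MTU that still fits in one underlay packet."""
    return underlay_mtu - OVERHEAD[mode]


if __name__ == "__main__":
    for mode in ("none", "ipip", "vxlan"):
        print(mode, max_pod_mtu(1500, mode))
```

The `mtu` of 1440 that appears in the CNI configuration later in this article is below the IPIP limit computed here, which leaves some extra headroom.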

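The Calico system architecture is shown in the figure:

Calico consists mainly of Felix, the Orchestrator Plugin, etcd, BIRD, and the BGP Route Reflector.

- Felix: the Calico agent, which runs on every node.

- Orchestrator Plugin: the plugin that integrates Calico into an orchestration system (such as Kubernetes or OpenStack), for example the CNI plugin for Kubernetes.

- etcd: the storage system that persists Calico's data.

- BIRD: the BGP client that distributes routing information.

- BGP Route Reflector: an optional component used in larger-scale network scenarios.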

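The main steps for deploying Calico on Kubernetes are as follows:

- Modify the startup parameters of the Kubernetes services and restart them. On the Master, set the kube-apiserver startup parameter --allow-privileged=true (calico-node must run in privileged mode on every Node). On each Node, set the kubelet startup parameter --network-plugin=cni (use the CNI network plugin).

- Create the Calico services, mainly calico-node and the Calico policy controller. The resource objects to create are: a ConfigMap named calico-config, which holds the configuration parameters Calico needs; a Secret named calico-etcd-secrets, used to connect to etcd over TLS; the calico/node container, deployed as a DaemonSet so that it runs on every Node; the Calico CNI installation on every Node (performed by the install-cni container); and a Deployment named calico/kube-policy-controller, which handles the Network Policy set for Pods in the Kubernetes cluster.

The detailed deployment steps are as follows:

Download the YAML manifest: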

# curl https://docs.projectcalico.org/v3.11/manifests/calico-etcd.yaml -o calico-etcd.yaml

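After downloading, modify the following settings:

- Configure the etcd connection address. If etcd uses HTTPS, certificates must be configured as well (ConfigMap, Secret).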

# cat /opt/etcd/ssl/ca.pem | base64 -w 0

LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSURlakNDQW1LZ0F3SUJBZ0lVRHQrZ21iYnhzWmoxRGNrbGl3K240MkI5YW5Nd0RRWUpLb1pJaHZjTkFRRUwKQlFBd1F6RUxNQWtHQTFVRUJoTUNRMDR4RURBT0JnTlZCQWdUQjBKbGFXcHBibWN4RURBT0JnTlZCQWNUQjBKbAphV3BwYm1jeEVEQU9CZ05WQkFNVEIyVjBZMlFnUTBFd0hoY05NVGt4TWpBeE1UQXdNREF3V2hjTk1qUXhNVEk1Ck1UQXdNREF3V2pCRE1Rc3dDUVlEVlFRR0V3SkRUakVRTUE0R0ExVUVDQk1IUW1WcGFtbHVaekVRTUE0R0ExVUUKQnhNSFFtVnBhbWx1WnpFUU1BNEdBMVVFQXhNSFpYUmpaQ0JEUVRDQ0FTSXdEUVlKS29aSWh2Y05BUUVCQlFBRApnZ0VQQURDQ0FRb0NnZ0VCQUtEaGFsNHFaVG5DUE0ra3hvN3pYT2ZRZEFheGo2R3JVSWFwOGd4MTR4dFhRcnhrCmR0ZmVvUXh0UG5EbDdVdG1ZUkUza2xlYXdDOVhxM0hPZ3J1YkRuQ2ZMRnJZV05DUjFkeG1KZkNFdXU0YmZKeE4KVHNETVF1aUlxcnZ2aVN3QnQ3ZHUzczVTbEJUc2NOV0Y4TWNBMkNLTkVRbzR2Snp5RFZXRTlGTm1kdC8wOEV3UwpmZVNPRmpRV3BWWnprQW1Fc0VRaldtYUVHZjcyUXZvbmRNM2Raejl5M2x0UTgrWnJxOGdaZHRBeWpXQmdrZHB1ClVXZ2NaUTBZWmQ2Q2p4YWUwVzBqVkt5RER4bGlSQ3pLcUFiaUNucW9XYW1DVDR3RUdNU2o0Q0JiYTkwVXc3cTgKajVyekFIVVdMK0dnM2dzdndQcXFnL2JmMTR2TzQ2clRkR1g0Q2hzQ0F3RUFBYU5tTUdRd0RnWURWUjBQQVFILwpCQVFEQWdFR01CSUdBMVVkRXdFQi93UUlNQVlCQWY4Q0FRSXdIUVlEVlIwT0JCWUVGRFJTakhxMm0wVWVFM0JmCks2bDZJUUpPU2Vzck1COEdBMVVkSXdRWU1CYUFGRFJTakhxMm0wVWVFM0JmSzZsNklRSk9TZXNyTUEwR0NTcUcKU0liM0RRRUJDd1VBQTRJQkFRQUsyZXhBY2VhUndIRU9rQXkxbUsyWlhad1Q1ZC9jRXFFMmZCTmROTXpFeFJSbApnZDV0aGwvYlBKWHRSeWt0aEFUdVB2dzBjWVFPM1gwK09QUGJkOHl6dzRsZk5Ka1FBaUlvRUJUZEQvZWdmODFPCmxZOCtrRFhxZ1FZdFZLQm9HSGt5K2xRNEw4UUdOVEdaeWIvU3J5N0g3VXVDcTN0UmhzR2E4WGQ2YTNIeHJKYUsKTWZna1ZsNDA0bW83QXlWUHl0eHMrNmpLWCtJSmd3a2dHcG9DOXA2cDMyZDI1Q0NJelEweDRiZCtqejQzNXY1VApvRldBUmcySGdiTTR0aHdhRm1VRDcrbHdqVHpMczMreFN3Tys0S3Bmc2tScTR5dEEydUdNRDRqUTd0bnpoNi8wCkhQRkx6N0FGazRHRXoxaTNmMEtVTThEUlhwS0JKUXZNYzk4a3IrK24KLS0tLS1FTkQgQ0VSVElGSUNBVEUtLS0tLQo=

# cat /opt/etcd/ssl/server-key.pem | base64 -w 0

LS0tLS1CRUdJTiBSU0EgUFJJVkFURSBLRVktLS0tLQpNSUlFb2dJQkFBS0NBUUVBdUZJdlNWNndHTTROZURqZktSWDgzWEVTWHhDVERvSVdpcFVJQmVTL3JnTHBFek10Cmk3enp6SjRyUGplbElJZ2ZRdVJHMHdJVXNzN3FDOVhpa3JGcEdnNXp5d2dMNmREZE9KNkUxcUIrUWprbk85ZzgKalRaenc3cWwxSitVOExNZ0k3TWNCU2VtWVo0TUFSTmd6Z09xd2x2WkVBMnUvNFh5azdBdUJYWFgrUTI2SitLVApYVEJ4MnBHOXoxdnVmU0xzMG52YzdKY2gxT2lLZ2R2UHBqSktPQjNMTm83ZnJXMWlaenprTExWSjlEV3U1NFdMCk4rVE5GZWZCK1lBTTdlSHVHTjdHSTJKdW1YL3hKczc3dnQzRjF2VStYSitzVTZ3cmRGMStULzVyamNUN1dDdmgKbkZVWlBxTk9NWUZTWUdlOVBIY1l0Y1Y1MENnUVV2NUx2OTE1elFJREFRQUJBb0lCQUY1YkRBUG1LZ1Y0cmVLRwpVbzhJeDNwZ29NUHppeVJaS2NybGdjYnFrOGt6aWpjZThzamZBSHNWMlJNdmp5TjVLMitseGkvTWwrWDFFRkRnCnUreldUdlJjdzZBQ3pYNXpRbHZ5b2hQdzh0Rlp5cURURUNSRjVMc2t1REdCUTlCNEVoTFVaSnFxOG54MFdMYlEKUWJVVW9YeC9ZajNhazJRUklOM0R5YnRYMlNpUHBPN1hVMmFiVkNzYkZBWW1uN2lweW16M25WWFRseDJuVk1sZQpmYzhXbERsd09pL3FJUThwZjNpRnowRDVoUGl5ZDY5eXp2b2ZrVk5CbCtodGFPbGdwdVNqSEFrNnhIcFpBUExTCkIxclVJaDk1RWozTUk5U3BuSnNWcUFFVHFSSmpYOHl3bFZYa2dvd3I2TXJuTnVXelRZUnlSNDY5UFVmKzhaSzQKUjE1WTdvMENnWUVBM21HdjErSmRuSkVrL1R4M2ZaMkFmOWJIazJ1dE5FemxUakN5YlZtYkxKamx0M1pjSG96UgphZVR2azJSQ0Q4VDU0NU9EWmIzS3Zxdzg2TXkxQW9lWmpqV3pTR1VIVHZJYTRDQ3lMenBXaVNaQkRHSE9KbDBtCk9nbnRRclFPK0UwZjNXOHZtbkp0NGoySGxMWHByL1R6Zk12R05lTWVkSUlIMC8xZXV0WkJjNnNDZ1lFQTFDK0gKaDVtQ0pnbllNcm5zK3dZZ2lWVTJFdjZmRUc2VGl2QU5XUUlldVpHcDRoclZISXc0UTV3SHhZNWgrNE15bXFORAprMmVDYU15RjFxb1NCS1hOckFZS3RtWCtxR3ltaVBpWlRJWEltZlppcENocWl3dm1udjMxbWE1Njk2NkZ6SjdaCjJTLzZkTGtweWI2OTUxRTl5azRBOEYzNVdQRlY4M01DanJ1bjBHY0NnWUFoOFVFWXIybGdZMXNFOS95NUJKZy8KYXZYdFQyc1JaNGM4WnZ4azZsOWY4RHBueFQ0TVA2d2JBS0Y4bXJubWxFY2I4RUVHLzIvNXFHcG5rZzh5d3FXeQphZ25pUytGUXNHMWZ0ajNjTFloVnlLdjNDdHFmU21weVExK2VaY00vTE81bkt2aFdGNDhrRUFZb3NaZG9qdmUzCkhaYzBWR1VxblVvNmxocW1ZOXQ3bndLQmdGbFFVRm9Sa2FqMVI5M0NTVEE0bWdWMHFyaEFHVEJQZXlkbWVCZloKUHBtWjZNcFZ4UktwS3gyNlZjTWdkYm5xdGFoRnhMSU5SZVZiQVpNa0wwVnBqVE0xcjlpckFoQmUrNUo0SWY4Rgo2VFIxYzN2cHp6OE1HVjBmUlB3Vlo0bE9HdC9RbFo1SUJjS1FGampuWXdRMVBDOGx1bHR6RXZ3UFNjQ1p6cC9KCitZOU5Bb0dBVkpybjl4QmZhYWF5T3BlMHFTTjNGVzRlaEZyaTFa
RUYvVHZqS3lnMnJGUng3VDRhY1dNWWdSK20KL2RsYU9CRFN6bjNheVVXNlBwSnN1UTBFanpIajFNSVFtV3JOQXNGSVJiN0Z6YzdhaVQzMFZmNFFYUmMwQUloLwpXNHk0OW5wNWNDWUZ5SXRSWEhXMUk5bkZPSjViQjF2b1pYTWNMK1dyMVZVa2FuVlIvNEE9Ci0tLS0tRU5EIFJTQSBQUklWQVRFIEtFWS0tLS0tCg==

# cat /opt/etcd/ssl/server.pem | base64 -w 0

LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSURyekNDQXBlZ0F3SUJBZ0lVZUFZTHdLMkxVdnE0V2ZiSG92cTlzVS8rWlJ3d0RRWUpLb1pJaHZjTkFRRUwKQlFBd1F6RUxNQWtHQTFVRUJoTUNRMDR4RURBT0JnTlZCQWdUQjBKbGFXcHBibWN4RURBT0JnTlZCQWNUQjBKbAphV3BwYm1jeEVEQU9CZ05WQkFNVEIyVjBZMlFnUTBFd0hoY05NVGt4TWpBeE1UQXdNREF3V2hjTk1qa3hNVEk0Ck1UQXdNREF3V2pCQU1Rc3dDUVlEVlFRR0V3SkRUakVRTUE0R0ExVUVDQk1IUW1WcFNtbHVaekVRTUE0R0ExVUUKQnhNSFFtVnBTbWx1WnpFTk1Bc0dBMVVFQXhNRVpYUmpaRENDQVNJd0RRWUpLb1pJaHZjTkFRRUJCUUFEZ2dFUApBRENDQVFvQ2dnRUJBTGhTTDBsZXNCak9EWGc0M3lrVi9OMXhFbDhRa3c2Q0ZvcVZDQVhrdjY0QzZSTXpMWXU4Cjg4eWVLejQzcFNDSUgwTGtSdE1DRkxMTzZndlY0cEt4YVJvT2M4c0lDK25RM1RpZWhOYWdma0k1Snp2WVBJMDIKYzhPNnBkU2ZsUEN6SUNPekhBVW5wbUdlREFFVFlNNERxc0piMlJBTnJ2K0Y4cE93TGdWMTEva051aWZpazEwdwpjZHFSdmM5YjduMGk3Tko3M095WElkVG9pb0hiejZZeVNqZ2R5emFPMzYxdFltYzg1Q3kxU2ZRMXJ1ZUZpemZrCnpSWG53Zm1BRE8zaDdoamV4aU5pYnBsLzhTYk8rNzdkeGRiMVBseWZyRk9zSzNSZGZrLythNDNFKzFncjRaeFYKR1Q2alRqR0JVbUJudlR4M0dMWEZlZEFvRUZMK1M3L2RlYzBDQXdFQUFhT0JuVENCbWpBT0JnTlZIUThCQWY4RQpCQU1DQmFBd0hRWURWUjBsQkJZd0ZBWUlLd1lCQlFVSEF3RUdDQ3NHQVFVRkJ3TUNNQXdHQTFVZEV3RUIvd1FDCk1BQXdIUVlEVlIwT0JCWUVGTHNKb2pPRUZGcGVEdEhFSTBZOEZIUjQvV0c4TUI4R0ExVWRJd1FZTUJhQUZEUlMKakhxMm0wVWVFM0JmSzZsNklRSk9TZXNyTUJzR0ExVWRFUVFVTUJLSEJNQ29BajJIQk1Db0FqNkhCTUNvQWo4dwpEUVlKS29aSWh2Y05BUUVMQlFBRGdnRUJBQzVOSlh6QTQvTStFRjFHNXBsc2luSC9sTjlWWDlqK1FHdU0wRWZrCjhmQnh3bmV1ZzNBM2l4OGxXQkhZTCtCZ0VySWNsc21ZVXpJWFJXd0h4ZklKV2x1Ukx5NEk3OHB4bDBaVTZWUTYKalFiQVI2YzhrK0FhbGxBTUJUTkphY3lTWkV4MVp2c3BVTUJUU0l3bmk5RFFDUDJIQStDNG5mdHEwMGRvckQwcgp5OXVDZ3dnSDFrOG42TkdSZ0lJbVl6dFlZZmZHbEQ3R3lybEM1N0plSkFFbElUaElEMks1Y090M2dUb2JiNk5oCk9pSWpNWVAwYzRKL1FTTHNMNjZZRTh5YnhvZ2M2L3JTYzBIblladkNZbXc5MFZxY05oU3hkT2liaWtPUy9SdDAKZHVRSnU3cmdkM3pldys3Y05CaTIwTFZrbzc3dDNRZWRZK0c3dUxVZ21qNWJudkU9Ci0tLS0tRU5EIENFUlRJRklDQVRFLS0tLS0K
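The `base64 -w 0` commands above produce a single-line encoding, which is the form a Kubernetes Secret's `data` field expects. The sketch below is a rough Python equivalent for anyone who prefers to script this step; the function names and the sample bytes are hypothetical.

```python
import base64


def encode_for_secret(raw: bytes) -> str:
    """Return single-line Base64 text, like `base64 -w 0`."""
    return base64.b64encode(raw).decode("ascii")


def decode_from_secret(encoded: str) -> bytes:
    """Reverse the encoding; anyone who can read the Secret can do this."""
    return base64.b64decode(encoded)


if __name__ == "__main__":
    # Placeholder bytes standing in for the contents of a PEM file.
    sample = b"-----BEGIN CERTIFICATE-----\nplaceholder\n-----END CERTIFICATE-----\n"
    text = encode_for_secret(sample)
    print(text)
    print(decode_from_secret(text) == sample)
```

Base64 is an encoding, not encryption, so the encoded private key should be handled as carefully as the key file itself.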

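Put the Base64-encoded strings above into the manifest: ca.pem corresponds to etcd-ca, server-key.pem to etcd-key, and server.pem to etcd-cert. Also set the etcd certificate paths and the etcd endpoints (the same endpoints configured for the API server in /opt/kubernetes/cfg/kube-apiserver.conf).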

# vim calico-etcd.yaml

...

apiVersion: v1

kind: Secret

type: Opaque

metadata:

  name: calico-etcd-secrets

  namespace: kube-system

data:

  # Populate the following with etcd TLS configuration if desired, but leave blank if

  # not using TLS for etcd.

  # The keys below should be uncommented and the values populated with the base64

  # encoded contents of each file that would be associated with the TLS data.

  # Example command for encoding a file contents: cat <file> | base64 -w 0

  etcd-key: <Base64-encoded string of server-key.pem from above>

  etcd-cert: <Base64-encoded string of server.pem from above>

  etcd-ca: <Base64-encoded string of ca.pem from above>

...

kind: ConfigMap

apiVersion: v1

metadata:

  name: calico-config

  namespace: kube-system

data:

  # Configure this with the location of your etcd cluster.

  etcd_endpoints: "https://192.168.2.61:2379,https://192.168.2.62:2379,https://192.168.2.63:2379"

  # If you're using TLS enabled etcd uncomment the following.

  # You must also populate the Secret below with these files.

  etcd_ca: "/calico-secrets/etcd-ca"

  etcd_cert: "/calico-secrets/etcd-cert"

  etcd_key: "/calico-secrets/etcd-key"

Set the Pod CIDR (CALICO_IPV4POOL_CIDR) according to your actual network plan; it must match the value in the controller-manager configuration file /opt/kubernetes/cfg/kube-controller-manager.conf.

# vim calico-etcd.yaml

...

- name: CALICO_IPV4POOL_CIDR

  value: "10.244.0.0/16"

...
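Before settling on a Pod CIDR, it can be worth checking that it does not overlap any network the nodes already use. The following is a minimal sketch using Python's standard `ipaddress` module; the helper names are hypothetical, and the sample networks are the host and docker0 networks that appear in the `ip route` output in this article.

```python
import ipaddress


def cidrs_overlap(a: str, b: str) -> bool:
    """True if the two CIDR ranges share at least one address."""
    return ipaddress.ip_network(a).overlaps(ipaddress.ip_network(b))


def conflicting_networks(pod_cidr: str, existing: list) -> list:
    """Return the entries of `existing` that overlap the planned Pod CIDR."""
    return [net for net in existing if cidrs_overlap(pod_cidr, net)]


if __name__ == "__main__":
    existing = ["192.168.2.0/24", "172.17.0.0/16"]
    print(conflicting_networks("10.244.0.0/16", existing))
```

An empty result means the planned pool is disjoint from the listed networks. This check says nothing about networks that are not in the list.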

Choose the working mode (CALICO_IPV4POOL_IPIP). Both BGP and IPIP are supported; here IPIP mode is turned off for now.

# vim calico-etcd.yaml

...

- name: CALICO_IPV4POOL_IPIP

  value: "Never"

...

After making the changes, apply the manifest:

# kubectl apply -f calico-etcd.yaml

secret/calico-etcd-secrets created

configmap/calico-config created

clusterrole.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrole.rbac.authorization.k8s.io/calico-node created

clusterrolebinding.rbac.authorization.k8s.io/calico-node created

daemonset.apps/calico-node created

serviceaccount/calico-node created

deployment.apps/calico-kube-controllers created

serviceaccount/calico-kube-controllers created

# kubectl get pods -n kube-system

If the Flannel network component was deployed beforehand, uninstall and delete Flannel first; this must be done on every node.

# kubectl delete -f kube-flannel.yaml

# ip link delete cni0

# ip link delete flannel.1

# ip route

default via 192.168.2.2 dev eth0

10.244.1.0/24 via 192.168.2.63 dev eth0

10.244.2.0/24 via 192.168.2.62 dev eth0

169.254.0.0/16 dev eth0 scope link metric 1002

172.17.0.0/16 dev docker0 proto kernel scope link src 172.17.0.1

192.168.2.0/24 dev eth0 proto kernel scope link src 192.168.2.61

# ip route del 10.244.1.0/24 via 192.168.2.63 dev eth0

# ip route del 10.244.2.0/24 via 192.168.2.62 dev eth0

# ip route

default via 192.168.2.2 dev eth0

169.254.0.0/16 dev eth0 scope link metric 1002

172.17.0.0/16 dev docker0 proto kernel scope link src 172.17.0.1

192.168.2.0/24 dev eth0 proto kernel scope link src 192.168.2.61

Download the calicoctl tool: https://github.com/projectcalico/calicoctl/releases

# wget -O /usr/local/bin/calicoctl https://github.com/projectcalico/calicoctl/releases/download/v3.11.1/calicoctl

# chmod +x /usr/local/bin/calicoctl

Use calicoctl to check the service status:

# calicoctl node status

Calico process is running.

IPv4 BGP status

+--------------+-------------------+-------+----------+-------------+

| PEER ADDRESS |     PEER TYPE     | STATE |  SINCE   |    INFO     |

+--------------+-------------------+-------+----------+-------------+

| 192.168.2.62 | node-to-node mesh | up    | 02:58:05 | Established |

| 192.168.2.63 | node-to-node mesh | up    | 03:08:46 | Established |

+--------------+-------------------+-------+----------+-------------+

IPv6 BGP status

No IPv6 peers found.

In fact, checking node status with calicoctl simply queries the local system, so it shows the same BGP sessions that netstat does.

# netstat -antp|grep bird

tcp 0 0 0.0.0.0:179 0.0.0.0:* LISTEN 62709/bird

tcp 0 0 192.168.2.61:179 192.168.2.63:58963 ESTABLISHED 62709/bird

tcp 0 0 192.168.2.61:179 192.168.2.62:37390 ESTABLISHED 62709/bird

To see more information, calicoctl needs a configuration file so that it can read the data in etcd.

Create the configuration file:

# mkdir /etc/calico

# vim /etc/calico/calicoctl.cfg

apiVersion: projectcalico.org/v3

kind: CalicoAPIConfig

metadata:

spec:

  datastoreType: "etcdv3"

  etcdEndpoints: "https://192.168.2.61:2379,https://192.168.2.62:2379,https://192.168.2.63:2379"

  etcdKeyFile: "/opt/etcd/ssl/server-key.pem"

  etcdCertFile: "/opt/etcd/ssl/server.pem"

  etcdCACertFile: "/opt/etcd/ssl/ca.pem"

View data and perform other operations:

# calicoctl get node

NAME

k8s-master-01

k8s-node-01

k8s-node-02

View the IPAM IP address pool:

# calicoctl get ippool

NAME CIDR SELECTOR

default-ipv4-ippool 10.244.0.0/16 all()

# calicoctl get ippool -o wide

NAME CIDR NAT IPIPMODE VXLANMODE DISABLED SELECTOR

default-ipv4-ippool 10.244.0.0/16 true Never Never false all()
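Calico's IPAM carves the pool into smaller blocks and hands them to nodes, which is why the routes shown in this article use /26 prefixes and the IPPool spec lists `blockSize: 26`. The sketch below shows the arithmetic, assuming that block size; the function names are hypothetical.

```python
import ipaddress


def block_for(pod_ip: str, pool_cidr: str, block_size: int = 26) -> str:
    """Return the block (as CIDR text) inside the pool that contains pod_ip."""
    pool = ipaddress.ip_network(pool_cidr)
    if ipaddress.ip_address(pod_ip) not in pool:
        raise ValueError(f"{pod_ip} is not in {pool_cidr}")
    # strict=False masks off the host bits to get the enclosing block.
    return str(ipaddress.ip_network(f"{pod_ip}/{block_size}", strict=False))


def block_count(pool_cidr: str, block_size: int = 26) -> int:
    """Number of blocks of the given size that fit in the pool."""
    pool = ipaddress.ip_network(pool_cidr)
    return 2 ** (block_size - pool.prefixlen)


if __name__ == "__main__":
    print(block_for("10.244.36.65", "10.244.0.0/16"))
    print(block_count("10.244.0.0/16"))
```

With these values, the pod address 10.244.36.65 falls in the block 10.244.36.64/26, which matches the route that node2 holds toward node1 in the routing tables in this article.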

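The rough flow when Pod 1 accesses Pod 2 is as follows:

- The packet leaves container 1 and arrives at the other end of the veth pair (on the host, with a name prefixed by cali);

- The host forwards the packet to the next hop (the gateway) according to its routing rules;

- On arrival at Node 2, the packet is forwarded to the cali device according to the routing rules and reaches container 2.

Routing tables: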

# node1

10.244.36.65 dev cali4f18ce2c9a1 scope link

10.244.169.128/26 via 192.168.31.63 dev ens33 proto bird

10.244.235.192/26 via 192.168.31.61 dev ens33 proto bird

# node2

10.244.169.129 dev calia4d5b2258bb scope link

10.244.36.64/26 via 192.168.31.62 dev ens33 proto bird

10.244.235.192/26 via 192.168.31.61 dev ens33 proto bird

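The key "next hop" routing rules here are maintained by Calico's Felix process. The routing information itself is delivered over BGP by the BGP client, that is, the BIRD component.

As you can see, Calico effectively treats every node in the cluster as a border router. Together the nodes form a fully connected network and exchange routing rules with one another over BGP. These nodes are called BGP peers.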
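The forwarding decision on node1 can be illustrated with a small longest-prefix-match lookup over the routing table above. This is only a sketch of what the kernel does, not Calico code; the route list is copied from node1's table, and the `lookup` helper is hypothetical.

```python
import ipaddress

# node1's routes from the table above: (prefix, next hop, device).
NODE1_ROUTES = [
    ("10.244.36.65/32", None, "cali4f18ce2c9a1"),   # local pod, directly attached
    ("10.244.169.128/26", "192.168.31.63", "ens33"),
    ("10.244.235.192/26", "192.168.31.61", "ens33"),
]


def lookup(routes, dst: str):
    """Pick the matching route with the longest prefix, as the kernel does."""
    ip = ipaddress.ip_address(dst)
    matches = [
        (ipaddress.ip_network(prefix), via, dev)
        for prefix, via, dev in routes
        if ip in ipaddress.ip_network(prefix)
    ]
    if not matches:
        return None
    net, via, dev = max(matches, key=lambda m: m[0].prefixlen)
    return str(net), via, dev


if __name__ == "__main__":
    # A pod on node2: forwarded to node2's address over the physical NIC.
    print(lookup(NODE1_ROUTES, "10.244.169.129"))
    # The local pod: delivered straight to its cali veth device.
    print(lookup(NODE1_ROUTES, "10.244.36.65"))
```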

Calico-related files

# ls /opt/cni/bin/calico-ipam

/opt/cni/bin/calico-ipam

# ls /etc/cni/net.d/

10-calico.conflist calico-kubeconfig calico-tls/

# cat /etc/cni/net.d/10-calico.conflist

{

"name": "k8s-pod-network",

"cniVersion": "0.3.1",

"plugins": [

{

"type": "calico",

"log_level": "info",

"etcd_endpoints": "https://192.168.2.61:2379,https://192.168.2.62:2379,https://192.168.2.63:2379",

"etcd_key_file": "/etc/cni/net.d/calico-tls/etcd-key",

"etcd_cert_file": "/etc/cni/net.d/calico-tls/etcd-cert",

"etcd_ca_cert_file": "/etc/cni/net.d/calico-tls/etcd-ca",

"mtu": 1440,

"ipam": {

"type": "calico-ipam"

},

"policy": {

"type": "k8s"

},

"kubernetes": {

"kubeconfig": "/etc/cni/net.d/calico-kubeconfig"

}

},

{

"type": "portmap",

"snat": true,

"capabilities": {"portMappings": true}

}

]

}

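By default, the network maintained by Calico runs in full-mesh (node-to-node mesh) mode: every node in the Calico cluster establishes a connection with every other node to exchange routes. As the cluster grows, however, the mesh becomes enormous and the number of connections grows quadratically.

This is where Route Reflector (RR) mode comes in to solve the problem.

Designate one or more Calico nodes to act as route reflectors (usually two or more are configured) and have the other nodes obtain routing information from these RR nodes.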
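The scaling difference is easy to quantify. The sketch below counts BGP sessions in a full mesh and in a route reflector layout, assuming every node peers with every reflector and the reflectors also peer with one another (which is what a BGPPeer with `nodeSelector: all()` and a reflector `peerSelector`, like the one later in this article, produces). The function names are hypothetical.

```python
def full_mesh_sessions(nodes: int) -> int:
    """Each pair of nodes holds one BGP session: n * (n - 1) / 2."""
    return nodes * (nodes - 1) // 2


def route_reflector_sessions(nodes: int, reflectors: int) -> int:
    """Sessions when all nodes peer only with the route reflectors."""
    if not 0 < reflectors <= nodes:
        raise ValueError("reflectors must be between 1 and the node count")
    clients = nodes - reflectors
    # client-to-reflector sessions plus reflector-to-reflector sessions
    return clients * reflectors + reflectors * (reflectors - 1) // 2


if __name__ == "__main__":
    for n in (3, 100, 500):
        print(n, full_mesh_sessions(n), route_reflector_sessions(n, min(2, n)))
```

For 100 nodes this gives 4950 sessions in a full mesh against 197 with two reflectors.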

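The detailed steps are as follows:

- Disable the node-to-node BGP mesh

The default node-to-node mesh mode is best suited to clusters of fewer than 100 nodes.

Add a default BGP configuration in bgp.yaml, adjusting nodeToNodeMeshEnabled and asNumber: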

# cat > bgp.yaml << EOF

apiVersion: projectcalico.org/v3

kind: BGPConfiguration

metadata:

  name: default

spec:

  logSeverityScreen: Info

  nodeToNodeMeshEnabled: false

  asNumber: 63400

EOF

# calicoctl apply -f bgp.yaml # once applied, the cluster network is interrupted immediately

Successfully applied 1 'BGPConfiguration' resource(s)

# calicoctl get bgpconfig

NAME LOGSEVERITY MESHENABLED ASNUMBER

default Info false 63400

# calicoctl node status

Calico process is running.

IPv4 BGP status

No IPv4 peers found.

IPv6 BGP status

No IPv6 peers found.

The ASN can be obtained with:

# calicoctl get nodes --output=wide

NAME ASN IPV4 IPV6

k8s-master-01 (63400) 192.168.2.61/24

k8s-node-01 (63400) 192.168.2.62/24

k8s-node-02 (63400) 192.168.2.63/24
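When choosing an `asNumber`, it can help to check whether the value lies in a range reserved for private use (RFC 6996). The sketch below performs that check; the helper name is hypothetical. Note that 63400, the value used in this article, lies outside those ranges. That is harmless in an isolated lab, but it may be worth reconsidering if the cluster ever peers with external routers.

```python
# Private-use AS number ranges reserved by RFC 6996 (inclusive bounds).
PRIVATE_ASN_RANGES = (
    (64512, 65534),             # 16-bit private range
    (4200000000, 4294967294),   # 32-bit private range
)


def is_private_asn(asn: int) -> bool:
    """True if the AS number is reserved for private use."""
    return any(low <= asn <= high for low, high in PRIVATE_ASN_RANGES)


if __name__ == "__main__":
    for asn in (63400, 64512, 65000):
        print(asn, is_private_asn(asn))
```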

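- Configure the designated node to act as a route reflector

To let the BGPPeer resource select nodes easily, match them with a label selector.

Label the route reflector node:

To add a second route reflector, label the new node and configure it as a reflector node in the same way.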

# kubectl label node k8s-node-02 route-reflector=true

node/k8s-node-02 labeled

Then set routeReflectorClusterID on the route reflector node:

# calicoctl get nodes k8s-node-02 -o yaml> node.yaml

# vim node.yaml

apiVersion: projectcalico.org/v3

kind: Node

metadata:

  annotations:

    projectcalico.org/kube-labels: '{"beta.kubernetes.io/arch":"amd64","beta.kubernetes.io/os":"linux","kubernetes.io/arch":"amd64","kubernetes.io/hostname":"k8s-node2","kubernetes.io/os":"linux"}'

  creationTimestamp: null

  labels:

    beta.kubernetes.io/arch: amd64

    beta.kubernetes.io/os: linux

    kubernetes.io/arch: amd64

    kubernetes.io/hostname: k8s-node2

    kubernetes.io/os: linux

  name: k8s-node2

spec:

  bgp:

    ipv4Address: 192.168.31.63/24

    routeReflectorClusterID: 244.0.0.1 # add the cluster ID

  orchRefs:

  - nodeName: k8s-node2

    orchestrator: k8s

# calicoctl apply -f node.yaml

Successfully applied 1 'Node' resource(s)

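Now it is easy to use a label selector to configure the route reflector nodes and the non-reflector nodes as peers. The following makes every node connect to the route reflector nodes: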

# vim peer-with-route-reflectors.yaml

apiVersion: projectcalico.org/v3

kind: BGPPeer

metadata:

  name: peer-with-route-reflectors

spec:

  nodeSelector: all()

  peerSelector: route-reflector == 'true'

# calicoctl apply -f peer-with-route-reflectors.yaml

Successfully applied 1 'BGPPeer' resource(s)

# calicoctl get bgppeer

NAME PEERIP NODE ASN

peer-with-route-reflectors all() 0

Check the node's BGP connection status; only the connection between this node and the route reflector node remains:

# calicoctl node status

Calico process is running.

IPv4 BGP status

+--------------+---------------+-------+----------+-------------+

| PEER ADDRESS |   PEER TYPE   | STATE |  SINCE   |    INFO     |

+--------------+---------------+-------+----------+-------------+

| 192.168.2.63 | node specific | up    | 04:17:14 | Established |

+--------------+---------------+-------+----------+-------------+

IPv6 BGP status

No IPv6 peers found.

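The main limitation of Flannel's host-gw mode is that it requires layer-2 connectivity between the cluster hosts. The same limitation applies to Calico.

Switch to IPIP mode:

You can also set this directly when deploying Calico.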

# calicoctl get ipPool -o yaml > ipip.yaml

# vi ipip.yaml

apiVersion: projectcalico.org/v3

kind: IPPool

metadata:

  name: default-ipv4-ippool

spec:

  blockSize: 26

  cidr: 10.244.0.0/16

  ipipMode: Always # enable IPIP mode

  natOutgoing: true

# calicoctl apply -f ipip.yaml

# calicoctl get ippool -o wide

NAME CIDR NAT IPIPMODE VXLANMODE DISABLED SELECTOR

default-ipv4-ippool 10.244.0.0/16 true Always Never false all()

# ip route # a tunl0 interface is added

default via 192.168.2.2 dev eth0

10.244.44.192/26 via 192.168.2.63 dev tunl0 proto bird onlink

blackhole 10.244.151.128/26 proto bird

10.244.154.192/26 via 192.168.2.62 dev tunl0 proto bird onlink

169.254.0.0/16 dev eth0 scope link metric 1002

172.17.0.0/16 dev docker0 proto kernel scope link src 172.17.0.1

192.168.2.0/24 dev eth0 proto kernel scope link src 192.168.2.61

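IPIP diagram:

The rough flow when Pod 1 accesses Pod 2 is as follows:

- The packet leaves container 1 and arrives at the other end of the veth pair (on the host, with a name prefixed by cali);

- It enters the IP tunnel device (tunl0), where the Linux kernel's IPIP driver encapsulates it in an IP packet of the host network (the destination address of the new IP packet is the next-hop address of the original IP packet, that is, 192.168.31.63), so it becomes a packet from Node1 to Node2;

- The packet is forwarded at layer 3 by routers to Node2;

- When Node2 receives the packet, its network stack uses the IPIP driver to decapsulate it and recover the original IP packet;

- Then, according to the routing rules, the packet is forwarded to the cali device and reaches container 2.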
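The encapsulation and decapsulation steps above can be mimicked in a few lines of Python. This is purely an illustration of the packet layout; in a real cluster the Linux kernel's IPIP driver does this work, not user code. The function names are hypothetical, the node addresses come from the routing tables in this article, and the inner packet is an opaque placeholder rather than a real IP packet.

```python
import socket
import struct

IPPROTO_IPIP = 4  # IANA protocol number for IPv4-in-IPv4


def ipv4_checksum(header: bytes) -> int:
    """One's-complement sum over the header's 16-bit words."""
    total = sum(struct.unpack(f"!{len(header) // 2}H", header))
    while total >> 16:
        total = (total & 0xFFFF) + (total >> 16)
    return ~total & 0xFFFF


def encapsulate(inner_packet: bytes, node_src: str, node_dst: str) -> bytes:
    """Prepend a 20-byte outer IPv4 header addressed node-to-node."""
    def build(checksum: int) -> bytes:
        return struct.pack(
            "!BBHHHBBH4s4s",
            0x45,                     # version 4, header length 5 words
            0,                        # DSCP/ECN
            20 + len(inner_packet),   # total length
            0, 0,                     # identification, flags/fragment offset
            64,                       # TTL
            IPPROTO_IPIP,             # payload is another IPv4 packet
            checksum,
            socket.inet_aton(node_src),
            socket.inet_aton(node_dst),
        )
    # Compute the checksum over a header whose checksum field is zero.
    return build(ipv4_checksum(build(0))) + inner_packet


def decapsulate(outer_packet: bytes) -> bytes:
    """Strip the outer header and return the original pod-to-pod packet."""
    if outer_packet[9] != IPPROTO_IPIP:
        raise ValueError("not an IP-in-IP packet")
    header_len = (outer_packet[0] & 0x0F) * 4
    return outer_packet[header_len:]


if __name__ == "__main__":
    inner = b"placeholder for the original pod1 -> pod2 IP packet"
    outer = encapsulate(inner, "192.168.31.62", "192.168.31.63")
    print(len(outer) - len(inner))      # bytes added by the outer header
    print(decapsulate(outer) == inner)  # round trip recovers the payload
```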

Routing tables:

# node1

10.244.36.65 dev cali4f18ce2c9a1 scope link

10.244.169.128/26 via 192.168.31.63 dev tunl0 proto bird onlink

# node2

10.244.169.129 dev calia4d5b2258bb scope link

10.244.36.64/26 via 192.168.31.62 dev tunl0 proto bird onlink

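As you can see, when Calico uses IPIP mode, cluster network performance drops because of the extra encapsulation and decapsulation work. It is therefore recommended to place all host nodes in a single subnet and avoid IPIP.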
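A quick way to judge whether IPIP can be avoided is to check that all node addresses fall in one subnet. The sketch below does this with the standard `ipaddress` module. The helper names are hypothetical, and the check assumes that sharing an IP subnet implies layer-2 adjacency, which is typical but not guaranteed.

```python
import ipaddress


def same_subnet(node_addrs) -> bool:
    """True if all node interface addresses (IP/prefix) share one subnet."""
    networks = {ipaddress.ip_interface(addr).network for addr in node_addrs}
    return len(networks) == 1


def needs_encapsulation(node_addrs) -> bool:
    """Plain routing only works when nodes are directly reachable."""
    return not same_subnet(node_addrs)


if __name__ == "__main__":
    # Node addresses as reported by `calicoctl get nodes --output=wide`.
    nodes = ["192.168.2.61/24", "192.168.2.62/24", "192.168.2.63/24"]
    print(needs_encapsulation(nodes))
```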

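8. Calico Network Policy

Once Calico is deployed, you can implement Kubernetes network policy (NetworkPolicy). Network policy is described in detail in the earlier article on implementing Kubernetes NetworkPolicy with Flannel and Canal, so it is not repeated here.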

Source: 山山仙人博客
